By Yoree Koh

As Brenton Tarrant drove away from the New Zealand mosque where
he allegedly went on a shooting spree, only 10 people were tuned
into his live broadcast of the rampage on Facebook Live, according
to archived versions of his page.

But the video, which shows dozens of people inside the Al Noor
mosque in Christchurch being gunned down, has likely been viewed
millions of times in various formats across the internet. The
footage was recorded, repackaged and reposted on mainstream sites,
on fringe destinations with looser restrictions and in the web's
darkest corners, which are accessible only with special software.

Facebook Inc. and Google's YouTube were still working this
weekend to keep the video off their own sites.

The vast cloning of the footage underlines a stark reality in
the era of live online broadcasting: These videos can't be cut
off.

Artificial intelligence software isn't powerful enough to fully
detect violent content as it is being broadcast, according to
researchers. And widely available software enables people to
instantly record and create a copy of an online video. That means
the footage now lives on people's phones and computers, showing how
little control the major tech platforms have over the fate of a
video once it airs.

The video was removed only minutes after it stopped airing,
according to social-media intelligence company Storyful, which
viewed archived versions of Mr. Tarrant's Facebook page. Facebook
said it was alerted by New Zealand police shortly after the live
stream began.

But by then, it was too late. Anyone with a link to the video
could have recorded it.

Facebook said that in the first 24 hours after the attack it
blocked or removed 1.5 million copies of the video from its site.
About 80% of those videos were cut off while they were being
uploaded to Facebook. That means 300,000 versions of the video
still sneaked through.

YouTube, owned by Google parent Alphabet Inc., said it has taken
down tens of thousands of postings of the video, while Twitter Inc.
said it suspended Mr. Tarrant's account and was working to remove
the video.

Facebook, Twitter and YouTube have stepped up their investments
in artificial intelligence tools and human moderators to detect and
remove content that violates their guidelines. These internet
giants have made progress in stamping out terrorist propaganda from
Islamic State militants, for example. They employ a shared database
in which terrorist content is assigned digital fingerprints, called
"hashes," that are used to detect visual similarities and
automatically prevent the content from being uploaded.
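
To make the fingerprinting idea concrete, here is a minimal sketch of one simple perceptual hash, often called an average hash. It is an illustration only: the article does not say which hashing method the platforms use, and the function names, the tiny 4x4 "frames" and the distance threshold below are all hypothetical. The sketch shows why a lightly re-encoded copy can still match a known fingerprint while a mirrored copy may not.

```python
from typing import Iterable, List

Frame = List[List[int]]  # hypothetical grayscale frame, pixel values 0-255


def average_hash(frame: Frame) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the frame mean."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def matches_blocklist(frame: Frame, blocklist: Iterable[int],
                      max_distance: int = 2) -> bool:
    """Flag a frame whose hash is close to any known hash (threshold assumed)."""
    h = average_hash(frame)
    return any(hamming_distance(h, known) <= max_distance for known in blocklist)


# Made-up 4x4 frame standing in for one frame of a known video.
original = [
    [200, 190, 30, 20],
    [210, 180, 25, 15],
    [40, 35, 220, 230],
    [30, 20, 215, 225],
]

# A re-encoded copy: every pixel slightly brighter.
reencoded = [[min(255, p + 10) for p in row] for row in original]

# A doctored copy: the frame mirrored left to right.
mirrored = [row[::-1] for row in original]

blocklist = {average_hash(original)}

print(matches_blocklist(reencoded, blocklist))  # small change, hash still matches
print(matches_blocklist(mirrored, blocklist))   # mirrored copy evades this hash
```

In this toy case the brightened copy produces the same fingerprint as the original, while the mirrored copy flips every bit and slips past the check. Production systems are far more robust than this sketch, but the same trade-off applies: the more a copy is altered, the harder it is to match against a stored hash.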

But these tactics can be circumvented if the footage is
doctored. Versions of Mr. Tarrant's video, for example, were edited
to imitate a first-person shooter game and appeared on Discord, a
messaging app for videogamers.

The software can also struggle to catch videos that aren't the
original, such as a recording from a mobile phone camera of the
video playing in a web browser. To try to tackle this, Facebook
says it is employing audio technology and also hashing other
uploads.

Live online broadcasting, which is exploding in popularity,
compounds the problem because it is challenging to monitor in real
time. The algorithms aren't yet equipped to distinguish reality
from fiction, or to detect certain moving images like guns held in
different positions.

Over the weekend, links to different versions and clips of the
rampage were readily available on multiple fringe sites such as
Gab, BestGore.com and DTube, an alternative to YouTube that has
little to no moderation. Links on the storage site Dropbox were
being circulated and were still active Sunday.

The video was given a supercharged boost because of the way Mr.
Tarrant promoted it. Before the shooting spree, Mr. Tarrant
apparently posted his alleged intention to attack the mosque, and
provided links to the live stream and an accompanying manifesto
filled with white supremacist conspiracy theories on 8chan, an
anonymous messaging forum favored by extremist groups.

Part of the calculation, say internet researchers, was to take
advantage of 8chan's culture of archiving sensitive videos. By
giving a heads up to the 8chan community about the attack and then
posting a link to the live stream, Mr. Tarrant ensured that the
video couldn't be permanently deleted.

"You have these groups of people who consider themselves quasi
movements online and they believe they own the internet and as a
result these calls to action are almost rote memory," said Joan
Donovan, director of the Technology and Social Change Research
Project at Harvard University's Shorenstein Center. "They're just
part of the culture, so if someone says that they're going to
commit some kind of atrocity then you will see this downloading and
reuploading practice happen."

That could explain why, according to YouTube, the site has seen
an unprecedented volume of attempts to post original and modified
versions of the shooting video. A YouTube spokeswoman said that if
it appears a criminal act will take place the website will end a
live stream and may terminate the channel showing it. YouTube now
has 10,000 workers devoted to addressing content that violates its
rules.

Facebook appears to have become quicker at removing live
broadcasts after facing criticism that it took too long to act
after other violent incidents. In 2017, a man in Thailand broadcast
the murder of his infant daughter and the video remained on the
site for roughly 24 hours.

This time, Facebook said its content-policy team immediately
designated the New Zealand shooting as a terrorist attack, meaning
any praise or support of the event violates the company's rules and
is removed.

But it is still having to improvise. Facebook said it initially
allowed clips and images showing nonviolent scenes of Mr. Tarrant's
video to stay up, but it has since reversed course and is now
removing all of his footage. On Sunday, New Zealand's government
emphasized it was a crime to distribute or possess the video
because it considers it objectionable under law.

Highlighting the game of Whac-A-Mole, a two-minute clip of the
video showing people getting shot was still viewable on Facebook as
of Sunday afternoon.

Write to Yoree Koh at yoree.koh@wsj.com

(END) Dow Jones Newswires

March 17, 2019 19:15 ET (23:15 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

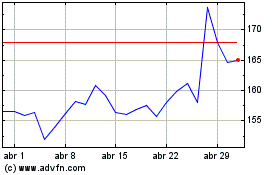

Alphabet (NASDAQ:GOOG)

Gráfico Histórico do Ativo

De Mar 2024 até Abr 2024

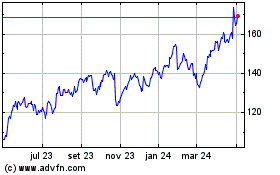

Alphabet (NASDAQ:GOOG)

Gráfico Histórico do Ativo

De Abr 2023 até Abr 2024