Three new Amazon SageMaker HyperPod capabilities and the addition of popular AI applications from AWS Partners directly in SageMaker help customers remove undifferentiated heavy lifting across the AI development lifecycle, making it faster and easier to build, train, and deploy models

At AWS re:Invent, Amazon Web Services, Inc. (AWS), an Amazon.com, Inc. company (NASDAQ: AMZN), today announced four new innovations for Amazon SageMaker AI to help customers get started faster with popular publicly available models, maximize training efficiency, lower costs, and use their preferred tools to accelerate generative artificial intelligence (AI) model development. Amazon SageMaker AI is an end-to-end service used by hundreds of thousands of customers to help build, train, and deploy AI models for any use case with fully managed infrastructure, tools, and workflows.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20241204660610/en/

[Graphic: HyperPod AI Partner Apps in SageMaker (Business Wire)]

- Three powerful new additions to Amazon SageMaker HyperPod make it easier for customers to quickly get started with training some of today’s most popular publicly available models, save weeks of model training time with flexible training plans, and maximize compute resource utilization to reduce costs by up to 40%.

- SageMaker customers can now easily and securely discover, deploy, and use fully managed generative AI and machine learning (ML) development applications from AWS Partners, such as Comet, Deepchecks, Fiddler AI, and Lakera, directly in SageMaker, giving them the flexibility to choose the tools that work best for them.

- Articul8, Commonwealth Bank of Australia, Fidelity, Hippocratic AI, Luma AI, NatWest, NinjaTech AI, OpenBabylon, Perplexity, Ping Identity, Salesforce, and Thomson Reuters are among the customers using new SageMaker capabilities to accelerate generative AI model development.

“AWS launched Amazon SageMaker seven years ago to simplify the process of building, training, and deploying AI models, so organizations of all sizes could access and scale their use of AI and ML,” said Dr. Baskar Sridharan, vice president of AI/ML Services and Infrastructure at AWS. “With the rise of generative AI, SageMaker continues to innovate at a rapid pace and has already launched more than 140 capabilities since 2023 to help customers like Intuit, Perplexity, and Rocket Mortgage build foundation models faster. With today’s announcements, we’re offering customers the most performant and cost-efficient model development infrastructure possible to help them accelerate the pace at which they deploy generative AI workloads into production.”

SageMaker HyperPod: The infrastructure of choice to train generative AI models

With the advent of generative AI, the process of building, training, and deploying ML models has become significantly more difficult, requiring deep AI expertise, access to massive amounts of data, and the creation and management of large clusters of compute. Additionally, customers need to develop specialized code to distribute training across the clusters, continuously inspect and optimize their model, and manually fix hardware issues, all while trying to manage timelines and costs. This is why AWS created SageMaker HyperPod, which helps customers efficiently scale generative AI model development across thousands of AI accelerators, reducing time to train foundation models by up to 40%. Leading startups such as Writer, Luma AI, and Perplexity, and large enterprises such as Thomson Reuters and Salesforce, are accelerating model development thanks to SageMaker HyperPod. Amazon also used SageMaker HyperPod to train the new Amazon Nova models, reducing their training costs, improving the performance of their training infrastructure, and saving them months of manual work that would have been spent setting up their cluster and managing the end-to-end process.

Now, even more organizations want to fine-tune popular publicly available models or train their own specialized models to transform their businesses and applications with generative AI. That is why SageMaker HyperPod continues to evolve, making it easier, faster, and more cost-efficient for customers to build, train, and deploy these models at scale with new capabilities, including:

- New recipes help customers get started faster: Many customers want to take advantage of popular publicly available models, like Llama and Mistral, that can be customized to a specific use case using their organization’s data. However, it can take weeks of iterative testing to optimize training performance, including experimenting with different algorithms, carefully refining parameters, observing the impact on training, debugging issues, and benchmarking performance. To help customers get started in minutes, SageMaker HyperPod now provides access to more than 30 curated model training recipes for some of today’s most popular publicly available models, including Llama 3.2 90B, Llama 3.1 405B, and Mixtral 8x22B. These recipes greatly simplify the process of getting started by automatically loading training datasets, applying distributed training techniques, and configuring the system for efficient checkpointing and recovery from infrastructure failures. This empowers customers of all skill levels to achieve improved price performance for model training on AWS infrastructure from the start, eliminating weeks of iterative evaluation and testing. Customers can browse available training recipes in the SageMaker GitHub repository, adjust parameters to suit their customization needs, and deploy within minutes. Additionally, with a simple one-line edit, customers can seamlessly switch between GPU- and Trainium-based instances to further optimize price performance. Researchers at Salesforce were looking for ways to quickly get started with foundation model training and fine-tuning without having to worry about the infrastructure or spend weeks optimizing their training stack for each new model. With Amazon SageMaker HyperPod recipes, they can conduct rapid prototyping when customizing foundation models. Now, Salesforce’s AI Research teams are able to get started in minutes with a variety of pre-training and fine-tuning recipes, and can operationalize foundation models with high performance.

- Flexible training plans make it easy to meet training timelines and budgets: While infrastructure innovations help drive down costs and allow customers to train models more efficiently, customers must still plan and manage the compute capacity required to complete their training tasks on time and within budget. That is why AWS is launching flexible training plans for SageMaker HyperPod. In a few clicks, customers can specify their budget, desired completion date, and maximum amount of compute resources they need. SageMaker HyperPod then automatically reserves capacity, sets up clusters, and creates model training jobs, saving teams weeks of model training time. This reduces the uncertainty customers face when trying to acquire large clusters of compute to complete model development tasks. In cases where the proposed training plan does not meet the specified time, budget, or compute requirements, SageMaker HyperPod suggests alternate plans, like extending the date range, adding more compute, or conducting the training in a different AWS Region, as the next best option. Once the plan is approved, SageMaker automatically provisions the infrastructure and runs the training jobs. SageMaker uses Amazon Elastic Compute Cloud (EC2) Capacity Blocks to reserve the right amount of accelerated compute instances needed to complete the training job in time. By efficiently pausing and resuming training jobs based on when those capacity blocks are available, SageMaker HyperPod helps make sure customers have access to the compute resources they need to complete the job on time, all without manual intervention. Hippocratic AI develops safety-focused large language models (LLMs) for healthcare. To train several of their models, Hippocratic AI used SageMaker HyperPod flexible training plans to gain access to the accelerated compute resources they needed to complete their training tasks on time. This helped them accelerate their model training speed by 4x and more efficiently scale their solution to accommodate hundreds of use cases. Developers and data scientists at OpenBabylon, an AI company that customizes LLMs for underrepresented languages, have been using SageMaker HyperPod flexible training plans to streamline their access to GPU resources to run large-scale experiments. Using SageMaker HyperPod, they conducted 100 large-scale model training experiments that allowed them to build a model that achieved state-of-the-art results in English-to-Ukrainian translation. Thanks to SageMaker HyperPod, OpenBabylon was able to achieve this breakthrough on time while effectively managing costs.

- Task governance maximizes accelerator utilization: Increasingly, organizations are provisioning large amounts of accelerated compute capacity for model training. The compute resources involved are expensive and limited, so customers need a way to govern usage to ensure their compute resources are prioritized for the most critical model development tasks while avoiding waste or underutilization. Without proper controls over task prioritization and resource allocation, some projects end up stalling due to lack of resources, while others leave resources underutilized. This creates a significant burden for administrators, who must constantly re-plan resource allocation, while data scientists struggle to make progress. These problems prevent organizations from bringing AI innovations to market quickly and lead to cost overruns. With SageMaker HyperPod task governance, customers can maximize accelerator utilization for model training, fine-tuning, and inference, reducing model development costs by up to 40%. With a few clicks, customers can easily define priorities for different tasks and set up limits for how many compute resources each team or project can use. Once customers set limits across different teams and projects, SageMaker HyperPod will allocate the relevant resources, automatically managing the task queue to ensure the most critical work is prioritized. For example, if a customer urgently needs more compute for an inference task powering a customer-facing service, but all compute resources are in use, SageMaker HyperPod will automatically free up underutilized compute resources, or those assigned to non-urgent tasks, to make sure the urgent inference task gets the resources it needs. When this happens, SageMaker HyperPod automatically pauses the non-urgent tasks, saves the checkpoint so that all completed work is intact, and automatically resumes the task from the last-saved checkpoint once more resources are available, ensuring customers make the most of their compute. As a fast-growing startup that helps enterprises build their own generative AI applications, Articul8 AI needs to constantly optimize its compute environment to allocate its resources as efficiently as possible. Using the new task governance capability in SageMaker HyperPod, the company has seen a significant improvement in GPU utilization, resulting in reduced idle time and accelerated end-to-end model development. The ability to automatically shift resources to high-priority tasks has increased the team's productivity, allowing them to bring new generative AI innovations to market faster.
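The recipes bullet describes curated training configurations that customers adjust and can retarget from GPU to Trainium instances with a one-line edit. The Python sketch below models that idea under stated assumptions: the `TrainingRecipe` dataclass, its field names, and its default values are hypothetical and do not reflect the schema of the recipes in the SageMaker GitHub repository, and `ml.p5.48xlarge` and `ml.trn1.32xlarge` are used only as example GPU and Trainium instance types.

```python
from dataclasses import dataclass, replace

# Hypothetical recipe schema, for illustration only. The published HyperPod
# recipes use their own configuration files and field names.
@dataclass(frozen=True)
class TrainingRecipe:
    model: str
    instance_type: str
    instance_count: int
    global_batch_size: int
    checkpoint_dir: str
    checkpoint_every_n_steps: int

    @property
    def accelerator_family(self) -> str:
        # Trainium-based SageMaker instance types start with "ml.trn".
        return "trainium" if self.instance_type.startswith("ml.trn") else "gpu"

# A curated starting point with assumed, pre-tuned defaults.
base = TrainingRecipe(
    model="llama-3.1-405b",           # hypothetical model identifier
    instance_type="ml.p5.48xlarge",   # example GPU instance type
    instance_count=16,
    global_batch_size=1024,
    checkpoint_dir="/fsx/checkpoints",  # hypothetical checkpoint path
    checkpoint_every_n_steps=500,
)

# The "one-line edit": retarget the same recipe to Trainium instances.
trainium = replace(base, instance_type="ml.trn1.32xlarge")

# Other parameters can be adjusted the same way without touching the base recipe.
small_run = replace(base, instance_count=4, global_batch_size=256)

print(base.accelerator_family, trainium.accelerator_family)  # gpu trainium
```

Making the dataclass frozen and deriving variants with `dataclasses.replace` keeps the curated base recipe unchanged, so each customization is an explicit, reviewable override rather than an in-place mutation.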
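The flexible training plans bullet describes reserving capacity blocks, pausing between them, and resuming from a checkpoint until the job finishes, with alternate plans suggested when the deadline or budget cannot be met. The Python sketch below illustrates that planning logic with a simple earliest-first greedy fill. It is an assumption-laden toy, not how SageMaker HyperPod actually builds plans: `CapacityBlock`, `build_plan`, and `suggest_alternatives` are hypothetical names, the prices are made-up illustrative numbers rather than AWS pricing, and only one of the alternatives mentioned in the release (extending the date range) is modeled.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class CapacityBlock:
    start_hour: int                 # hours from now when the reservation begins
    end_hour: int                   # hours from now when the reservation ends
    instances: int                  # instances reserved in this block
    price_per_instance_hour: float  # illustrative price, not real AWS pricing

@dataclass
class TrainingPlan:
    segments: List[Tuple[int, int, int]]  # (start_hour, end_hour, instances)
    cost: float
    finish_hour: int

def build_plan(blocks: List[CapacityBlock], required_instance_hours: int,
               deadline_hour: int, budget: float) -> Optional[TrainingPlan]:
    """Fill the earliest capacity blocks until the job's compute need is covered.

    The job is assumed to checkpoint at the end of each segment and resume from
    that checkpoint at the start of the next, so gaps between blocks are paused
    time. Returns None if the deadline or budget cannot be met.
    """
    remaining = required_instance_hours
    segments: List[Tuple[int, int, int]] = []
    cost = 0.0
    for block in sorted(blocks, key=lambda b: b.start_hour):
        if remaining <= 0 or block.start_hour >= deadline_hour:
            break
        usable_hours = min(block.end_hour, deadline_hour) - block.start_hour
        # Use only as many whole hours of this block as the job still needs.
        hours = min(usable_hours, math.ceil(remaining / block.instances))
        if hours <= 0:
            continue
        segments.append((block.start_hour, block.start_hour + hours, block.instances))
        cost += hours * block.instances * block.price_per_instance_hour
        remaining -= hours * block.instances
    if remaining > 0 or cost > budget:
        return None
    finish = segments[-1][1] if segments else 0
    return TrainingPlan(segments, cost, finish)

def suggest_alternatives(blocks: List[CapacityBlock], required_instance_hours: int,
                         deadline_hour: int, budget: float,
                         extensions: Tuple[int, ...] = (24, 48, 72)):
    """When no plan fits, try progressively later deadlines (extending the date range)."""
    for extra in extensions:
        plan = build_plan(blocks, required_instance_hours, deadline_hour + extra, budget)
        if plan is not None:
            return f"extend deadline by {extra}h", plan
    return None

blocks = [
    CapacityBlock(0, 24, 8, 10.0),
    CapacityBlock(48, 96, 8, 10.0),
    CapacityBlock(120, 168, 16, 9.0),
]
plan = build_plan(blocks, required_instance_hours=400, deadline_hour=100, budget=5000.0)
print(plan.segments, plan.cost)  # [(0, 24, 8), (48, 74, 8)] 4000.0
print(build_plan(blocks, 400, 60, 5000.0))  # None
print(suggest_alternatives(blocks, 400, 60, 5000.0)[0])  # extend deadline by 24h
```

In the example, training runs for hours 0 to 24, pauses until the second block opens at hour 48, and resumes from its checkpoint until hour 74. Because blocks are filled earliest first, the sketch optimizes for finishing soon rather than for lowest cost; a planner that weighs price could instead rank blocks by `price_per_instance_hour` within the deadline.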
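The task governance bullet describes priority-based allocation with per-team limits, where urgent work preempts lower-priority tasks that are paused at a checkpoint and resumed later. The toy Python scheduler below sketches that behavior under stated assumptions: a single pool of interchangeable accelerators, integer priorities (higher is more urgent), and a `checkpoint_step` counter standing in for a checkpoint saved to storage. `Task`, `Scheduler`, and their methods are hypothetical names and are not part of any SageMaker HyperPod API.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Task:
    name: str
    team: str
    priority: int           # higher number = more urgent
    accelerators: int
    checkpoint_step: int = 0  # stands in for a checkpoint saved to storage
    state: str = "queued"     # queued | running | paused | done

class Scheduler:
    """Toy priority scheduler with per-team limits and checkpoint-based preemption."""

    def __init__(self, total_accelerators: int, team_quotas: Dict[str, int]):
        self.total = total_accelerators
        self.quotas = team_quotas
        self.running: List[Task] = []
        self.waiting: List[Task] = []

    def free(self) -> int:
        return self.total - sum(t.accelerators for t in self.running)

    def team_usage(self, team: str) -> int:
        return sum(t.accelerators for t in self.running if t.team == team)

    def submit(self, task: Task) -> None:
        self.waiting.append(task)
        self._schedule()

    def train(self, task: Task, steps: int) -> None:
        # Simulated progress; only running tasks advance.
        if task.state != "running":
            raise ValueError(f"{task.name} is not running")
        task.checkpoint_step += steps

    def complete(self, task: Task) -> None:
        self.running.remove(task)
        task.state = "done"
        self._schedule()  # freed accelerators may let paused work resume

    def _preempt_for(self, task: Task) -> bool:
        # Only lower-priority work may be displaced, least urgent first.
        victims = sorted((t for t in self.running if t.priority < task.priority),
                         key=lambda t: t.priority)
        if self.free() + sum(v.accelerators for v in victims) < task.accelerators:
            return False
        for victim in victims:
            if self.free() >= task.accelerators:
                break
            self.running.remove(victim)
            victim.state = "paused"  # progress is kept in victim.checkpoint_step
            self.waiting.append(victim)
        return True

    def _schedule(self) -> None:
        # Consider the most urgent waiting tasks first.
        for task in sorted(self.waiting, key=lambda t: -t.priority):
            if self.team_usage(task.team) + task.accelerators > self.quotas.get(task.team, 0):
                continue  # the team is at its limit; the task stays queued
            if self.free() < task.accelerators and not self._preempt_for(task):
                continue
            self.waiting.remove(task)
            task.state = "running"
            self.running.append(task)

sched = Scheduler(total_accelerators=16, team_quotas={"research": 16, "product": 8})
training = Task("llm-finetune", "research", priority=1, accelerators=16)
sched.submit(training)
sched.train(training, steps=1200)
inference = Task("chat-inference", "product", priority=10, accelerators=8)
sched.submit(inference)  # preempts training, which is paused at step 1200
print(training.state, inference.state)  # paused running
sched.complete(inference)  # freed accelerators let training resume
print(training.state, training.checkpoint_step)  # running 1200
```

The per-team limit is checked before preemption, so an urgent task cannot exceed its team's allocation even when lower-priority work could be displaced.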

Accelerate model development and deployment using popular AI apps from AWS Partners within SageMaker

Many customers use best-in-class generative AI and ML model development tools alongside SageMaker AI to conduct specialized tasks, like tracking and managing experiments, evaluating model quality, monitoring performance, and securing an AI application. However, integrating popular AI applications into a team’s workflow is a time-consuming, multi-step process. This includes searching for the right solution, performing security and compliance evaluations, monitoring data access across multiple tools, provisioning and managing the necessary infrastructure, building data integrations, and verifying adherence to governance requirements. Now, AWS is making it easier for customers to combine the power of specialized AI apps with the managed capabilities and security of Amazon SageMaker. This new capability removes the friction and heavy lifting for customers by making it easy to discover, deploy, and use best-in-class generative AI and ML development applications from leading partners, including Comet, Deepchecks, Fiddler, and Lakera Guard, directly within SageMaker.

SageMaker is the first service to offer a curated set of fully managed and secure partner applications for a range of generative AI and ML development tasks. This gives customers even greater flexibility and control when building, training, and deploying models, while reducing the time to onboard AI apps from months to weeks. Each partner app is fully managed by SageMaker, so customers do not have to worry about setting up the application or continuously monitoring to ensure there is enough capacity. By making these applications accessible directly within SageMaker, customers no longer need to move data out of their secure AWS environment, and they can reduce the time spent toggling between interfaces. To get started, customers simply browse the Amazon SageMaker Partner AI apps catalog, learning about the features, user experience, and pricing of the apps they want to use. They can then easily select and deploy the applications, managing access for the entire team using AWS Identity and Access Management (IAM).

Amazon SageMaker also plays a pivotal role in the development and operation of Ping Identity’s homegrown AI and ML infrastructure. With partner AI apps in SageMaker, Ping Identity will be able to deliver faster, more effective ML-powered functionality to their customers as a private, fully managed service, supporting their strict security and privacy requirements while reducing operational overhead.

All of the new SageMaker innovations are generally available to customers today.

To learn more, visit:

- The AWS Blog for details on today’s announcements: HyperPod flexible training plans, HyperPod task governance, and AI apps from partners in SageMaker.

- The Amazon SageMaker AI page to learn more about the capabilities.

- The Amazon SageMaker customer page to learn how companies are using Amazon SageMaker.

- The AWS re:Invent page for more details on everything happening at AWS re:Invent.

About Amazon Web Services

Since 2006, Amazon Web Services has been the world’s most comprehensive and broadly adopted cloud. AWS has been continually expanding its services to support virtually any workload, and it now has more than 240 fully featured services for compute, storage, databases, networking, analytics, machine learning and artificial intelligence (AI), Internet of Things (IoT), mobile, security, hybrid, media, and application development, deployment, and management from 108 Availability Zones within 34 geographic regions, with announced plans for 18 more Availability Zones and six more AWS Regions in Mexico, New Zealand, the Kingdom of Saudi Arabia, Taiwan, Thailand, and the AWS European Sovereign Cloud. Millions of customers—including the fastest-growing startups, largest enterprises, and leading government agencies—trust AWS to power their infrastructure, become more agile, and lower costs. To learn more about AWS, visit aws.amazon.com.

About Amazon

Amazon is guided by four principles: customer obsession rather than competitor focus, passion for invention, commitment to operational excellence, and long-term thinking. Amazon strives to be Earth’s Most Customer-Centric Company, Earth’s Best Employer, and Earth’s Safest Place to Work. Customer reviews, 1-Click shopping, personalized recommendations, Prime, Fulfillment by Amazon, AWS, Kindle Direct Publishing, Kindle, Career Choice, Fire tablets, Fire TV, Amazon Echo, Alexa, Just Walk Out technology, Amazon Studios, and The Climate Pledge are some of the things pioneered by Amazon. For more information, visit amazon.com/about and follow @AmazonNews.

Amazon.com, Inc. Media Hotline Amazon-pr@amazon.com

www.amazon.com/pr

Amazon.com (NASDAQ:AMZN)

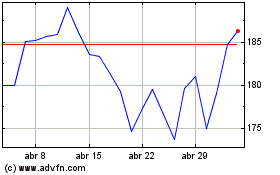

Gráfico Histórico do Ativo

De Nov 2024 até Dez 2024

Amazon.com (NASDAQ:AMZN)

Gráfico Histórico do Ativo

De Dez 2023 até Dez 2024